DESIRE6G focuses on two representative 6G use cases targeting extreme key performance indicators: 1) augmented/virtual reality, and 2) digital twin industrial applications.

Use case: Intelligent and resilient VR/AR applications with perceived zero latency

Augmented Reality / Virtual Reality (AR/VR) applications for future 6G are expected to offer significant improvements in overall quality of experience, latency, bandwidth, reliability, and connectivity. These improvements will enable a new generation of immersive experiences that go beyond what is possible with current 5G technologies. The application considered in this use case is an Augmented Reality app offering a perceived zero-latency immersive experience to a human user equipped with an AR/VR headset. The app will be composed of several functional modules placed at different locations, i.e., at the source nodes, at the edge computing nodes, and at the headset.

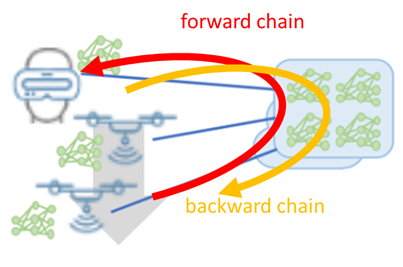

The image above shows how the application will exploit forward and backward chains for the communication among the functional modules.
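The forward/backward chain idea can be illustrated with a small data model. This is a minimal sketch, assuming a hypothetical set of four modules (`camera`, `detection`, `fusion`, `display`) mapped onto the three locations listed for this use case (source nodes, edge, headset); the module names, the chain order, and the helpers `hops` and `count_network_hops` are illustrative assumptions, not DESIRE6G components.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    name: str
    location: str  # hypothetical: "source", "edge", or "headset"


# Hypothetical module placement, loosely inspired by the detection/fusion steps of this use case.
MODULES = {
    m.name: m
    for m in (
        Module("camera", "source"),
        Module("detection", "edge"),
        Module("fusion", "edge"),
        Module("display", "headset"),
    )
}

# Forward chain: captured data flows from the source nodes towards the headset.
FORWARD_CHAIN = ["camera", "detection", "fusion", "display"]
# Backward chain: feedback flows from the headset side back to the cameras.
BACKWARD_CHAIN = ["display", "fusion", "camera"]


def hops(chain):
    """Return (src, dst, crosses_network) for each consecutive pair in a chain."""
    return [
        (src, dst, MODULES[src].location != MODULES[dst].location)
        for src, dst in zip(chain, chain[1:])
    ]


def count_network_hops(chain):
    """Count links whose endpoints sit at different locations, i.e. traverse the network."""
    return sum(1 for _, _, crosses in hops(chain) if crosses)


if __name__ == "__main__":
    for label, chain in (("forward", FORWARD_CHAIN), ("backward", BACKWARD_CHAIN)):
        print(label, " -> ".join(chain), "| network hops:", count_network_hops(chain))
```

Counting the links that cross a location boundary makes explicit which hops of each chain traverse the network, and therefore where the wireless and edge segments contribute latency.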

Technical description

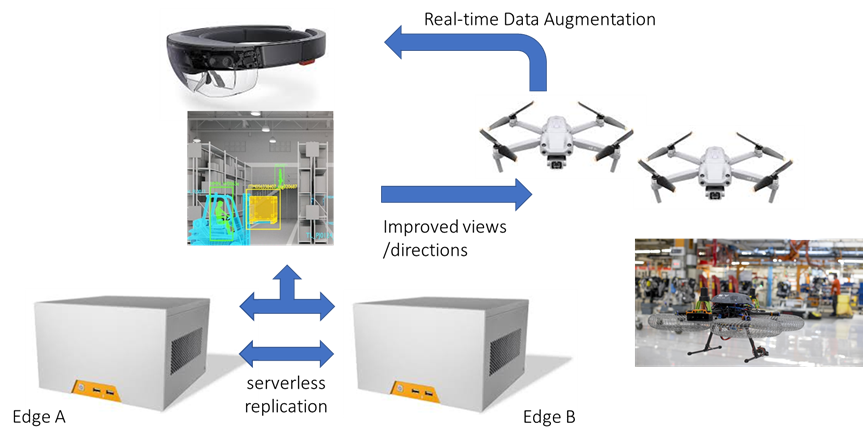

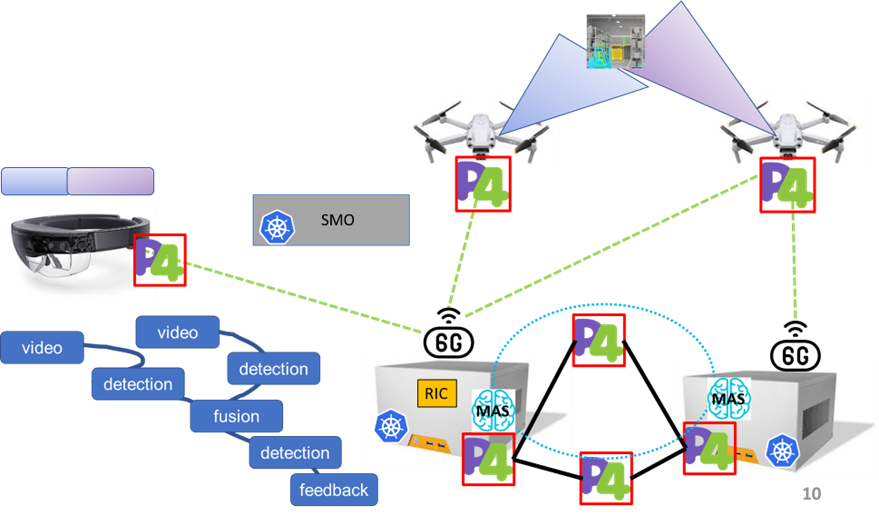

The images above show the high-level description of the application and its mapping onto the DESIRE6G architecture and components, respectively. First, the SMO will instantiate the application as a chain of functions relying on the serverless (Function as a Service) framework, while network resources will be orchestrated and enforced by radio controllers (e.g., the RIC) and SDN controllers (P4 programs and table entries). Then, at steady state, the pervasive telemetry framework will be activated to preserve the perceived zero-latency user experience in case of soft failures, network events, congestion, handovers, UAV battery issues, etc. To this end, telemetry will be activated at the terminals, in the radio segment, in the network, and at the edge nodes, allowing the collection of significant and heterogeneous metrics that serve as input features for the AI-based operations. In particular, MAS-based forecasting and detection will trigger different resiliency-targeted actions, mitigating specific critical conditions with no significant impact on the user experience.

The figure on the right also shows a high-level function chain graph of the application, including first-step detection, data/video fusion for the headset, and the final feedback commands to the cameras. Note that the mobile source nodes and the headset are connected wirelessly (e.g., beyond-5G, Wi-Fi 6/7), and this segment accounts for a significant share of the latency.
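The telemetry-driven loop (collect per-segment metrics, forecast, detect, trigger an action) can be sketched in a few lines. This is a simplified stand-in, not the MAS-based forecasting used in DESIRE6G: an exponentially weighted moving average (EWMA) replaces the learned forecaster, and a threshold on the summed forecast replaces the detector. The segment-to-action table (`ACTIONS`), the action names, the 20 ms budget, and the function `check` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical segment -> resiliency action table (illustrative names only).
ACTIONS = {
    "terminal": "switch_source_node",
    "radio": "prepare_handover",
    "network": "reroute_flow",
    "edge": "scale_out_function",
}


@dataclass
class EwmaForecaster:
    """Exponentially weighted moving average, a stand-in for a learned forecaster."""
    alpha: float = 0.5
    value: Optional[float] = None

    def update(self, sample: float) -> float:
        # The first sample initialises the forecast; later samples are blended in.
        if self.value is None:
            self.value = sample
        else:
            self.value = self.alpha * sample + (1 - self.alpha) * self.value
        return self.value


def check(samples_ms, forecasters, budget_ms):
    """Update per-segment latency forecasts from new telemetry samples.

    If the summed forecast exceeds the end-to-end budget, return
    (segment, action) for the segment with the largest forecast; else None.
    """
    forecasts = {seg: forecasters[seg].update(v) for seg, v in samples_ms.items()}
    if sum(forecasts.values()) <= budget_ms:
        return None
    worst = max(forecasts, key=forecasts.get)
    return worst, ACTIONS[worst]


if __name__ == "__main__":
    fc = {seg: EwmaForecaster(alpha=0.5) for seg in ACTIONS}
    budget = 20.0  # hypothetical end-to-end latency budget in ms
    print(check({"terminal": 2, "radio": 5, "network": 3, "edge": 4}, fc, budget))   # None
    print(check({"terminal": 2, "radio": 25, "network": 3, "edge": 4}, fc, budget))  # ('radio', 'prepare_handover')
```

In the second round the radio forecast rises to 15 ms, pushing the total to 24 ms, so the sketch selects the radio segment and returns its (hypothetical) handover-preparation action before the user notices the degradation.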

Use case: Digital Twin

An E2E Digital Twin system for robotics is an emerging concept that integrates information and communication technologies (ICTs) to create a highly consistent, accurate, and synchronized virtual representation of its physical counterpart, anywhere, anytime, and under any conditions. However, an E2E Digital Twin system is commonly confused with a Digital Twin application. The latter refers only to the virtual representation of a physical robot that is used for real-time prediction, optimization, monitoring, control, and improved decision-making. In contrast, the E2E Digital Twin system, which is being pushed by Industry 5.0, continuously orchestrates, manages, and controls the complete system, which includes not only the physical robot and its virtual replica but also the available computation and communication infrastructures, throughout its entire lifecycle. Moreover, since Industry 5.0 brings several opportunities for collaborative robots, industrial E2E Digital Twin systems will comprise not a single robotic twin but a twin of an entire industrial environment, unifying its view of robots, human personnel, and all existing processes.

The use case will benefit from deploying the E2E Digital Twin system across the Device-Edge-Cloud continuum, balancing the computing, storage, and networking requirements of each virtual function and departing from centralized Edge solutions, which are not suitable for supporting extremely low latency. The robots will be controlled remotely in real time, either by a virtual remote controller or by fully autonomous algorithms that reside in the Edge as part of the Digital Twin application. Robot sensor information (e.g., lidar, camera, odometry, joint states) will be sent upstream to update the virtual models in real time, while control instructions about the robot pose will be sent downstream to navigate the robots. In operational Digital Twins, most of the end-to-end control loop latency budget (the time between data being generated by the sensors and the control instruction being correctly received by the actuator) is spent on processing the sensor data; the remaining part of the budget limits the communication time to a few milliseconds. In-network acceleration and optimization, together with the E2E data plane programmability offered by DESIRE6G, will guarantee the strict KPIs of operational Digital Twins, which mainly relate to reliability and low latency. In addition, this use case will benefit from the DESIRE6G E2E service orchestration, which enables granular life-cycle management of the use case with minimum resource consumption and maximum energy efficiency.
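The latency budget reasoning above can be made concrete with a short sketch: the time left for communication is the loop budget minus processing time, and the uplink (sensor data to the twin) plus downlink (control instruction to the actuator) must fit in that remainder. The 20 ms budget, the 16 ms processing time, the per-direction delays, and the function names `communication_budget_ms` and `loop_meets_budget` are hypothetical values and names chosen only for illustration.

```python
def communication_budget_ms(e2e_budget_ms: float, processing_ms: float) -> float:
    """Latency left for communication after processing is subtracted from the loop budget."""
    remaining = e2e_budget_ms - processing_ms
    if remaining < 0:
        raise ValueError("processing alone exceeds the end-to-end budget")
    return remaining


def loop_meets_budget(e2e_budget_ms: float, processing_ms: float,
                      uplink_ms: float, downlink_ms: float) -> bool:
    """Check that uplink (sensors -> twin) plus downlink (twin -> actuator) fits the remainder."""
    return uplink_ms + downlink_ms <= communication_budget_ms(e2e_budget_ms, processing_ms)


if __name__ == "__main__":
    # Hypothetical numbers: 20 ms loop budget, 16 ms of sensor-data processing.
    print(communication_budget_ms(20.0, 16.0))      # 4.0
    print(loop_meets_budget(20.0, 16.0, 1.5, 2.0))  # True
    print(loop_meets_budget(20.0, 16.0, 3.0, 2.0))  # False
```

With these illustrative numbers only 4 ms remain for both directions of the loop, which shows why a few milliseconds of extra network delay is enough to break the control loop.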

Technical description

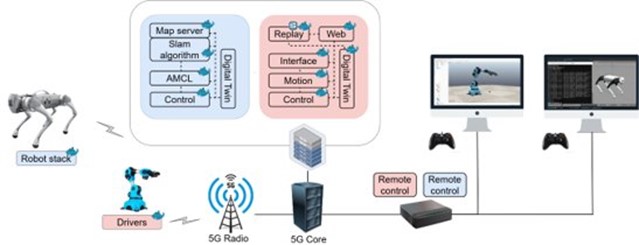

The Digital Twin use case shown above is designed to be provided as a network service. It envisages a set of robots that are remotely controlled, coordinated, and monitored to perform different tasks. This scenario is expected to enhance existing robotic systems with Digital Twin capabilities that optimize the operation of the robots, e.g., by eliminating defective pieces and repetitively performing accurate tasks at high speed. The Digital Twin application, which comprises the robot and its virtual replica, consists of different functions (shown in red and blue) that can be deployed on the robot or offloaded to the Edge/Cloud.
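Choosing which functions stay on the robot and which are offloaded to the Edge/Cloud is a placement problem. The sketch below uses a simple greedy heuristic, an illustrative choice rather than the DESIRE6G orchestration algorithm: functions with the tightest latency tolerance are placed first, each at the most preferred location (here, offloading as far from the robot as possible) whose round-trip time and free CPU allow it. The classes `Location` and `VirtualFunction`, the function `place`, and all function names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Location:
    name: str
    cpu_free: float  # hypothetical CPU units still available
    rtt_ms: float    # hypothetical round-trip latency from the robot


@dataclass
class VirtualFunction:
    name: str
    cpu: float         # hypothetical CPU demand
    max_rtt_ms: float  # hypothetical latency tolerance of the function


def place(functions, locations):
    """Greedy placement; `locations` is ordered from most to least preferred.

    Tightest-latency functions are placed first. Note: this mutates cpu_free.
    """
    placement = {}
    for fn in sorted(functions, key=lambda f: f.max_rtt_ms):
        for loc in locations:
            if loc.rtt_ms <= fn.max_rtt_ms and loc.cpu_free >= fn.cpu:
                loc.cpu_free -= fn.cpu
                placement[fn.name] = loc.name
                break
        else:  # no location satisfied both constraints
            raise RuntimeError(f"no feasible location for {fn.name}")
    return placement


if __name__ == "__main__":
    # Preference order: offload to the cloud if possible, else the edge, else keep on the robot.
    locs = [Location("cloud", 100.0, 40.0), Location("edge", 8.0, 5.0), Location("robot", 2.0, 0.0)]
    fns = [
        VirtualFunction("motor_control", 1.0, 2.0),
        VirtualFunction("slam", 4.0, 10.0),
        VirtualFunction("path_planning", 3.0, 20.0),
        VirtualFunction("analytics", 10.0, 1000.0),
    ]
    print(place(fns, locs))
    # {'motor_control': 'robot', 'slam': 'edge', 'path_planning': 'edge', 'analytics': 'cloud'}
```

Only the function with a 2 ms tolerance stays on the robot; latency-tolerant analytics go to the cloud. A real orchestrator would also weigh energy, storage, and network load, which this sketch ignores.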